- Experience in designing and developing SSIS packages for loading data from Oracle, Excel and flat/text files into SQL Server databases.
- Deep knowledge of RDBMS (SQL Server, MySQL, DB2, etc.) and NoSQL databases such as MongoDB, DynamoDB and Cassandra.
- Experience in writing shell scripts for various ETL needs.
- Experience in performing data masking/protection using Pentaho Data Integration (Kettle).
- Good experience with other ETL and reporting tools such as DTS, SSIS, SSRS, SSAS and Crystal Reports.
- Experience in using Pentaho Report Designer to create pixel-perfect reports in PDF, Excel, HTML, Text, Rich-Text-File, XML and CSV formats.
- Worked on business requirements to change the behavior of the cube using MDX scripting.
- Created ETL jobs to load Twitter JSON data and server data into MongoDB, and moved the MongoDB data into the data warehouse.
- Loaded unstructured data into the Hadoop Distributed File System (HDFS).

The Pentaho Big Data Plugin integrates Kettle (ETL) with Hadoop and various NoSQL data stores. It is a Kettle plugin that provides connectors to HDFS, MapReduce, HBase, Cassandra, MongoDB and CouchDB, and these connectors work across Pentaho Data Integration.

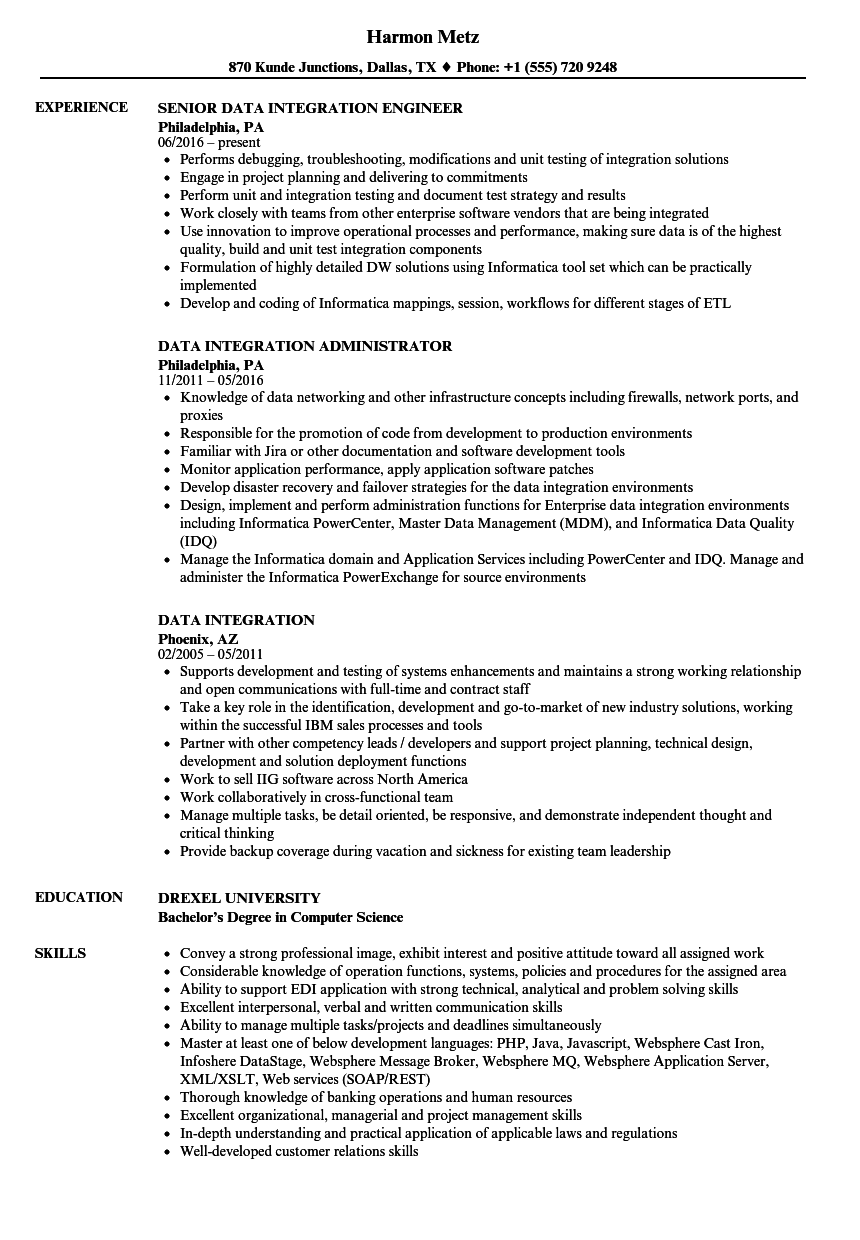

- Knowledge of the Software Development Lifecycle (SDLC), Agile, and the Application Maintenance Change Process (AMCP).
- Experienced in the design, development and implementation of large-scale projects in the financial, shipping and retail industries using data warehousing ETL tools (Pentaho) and business intelligence tools.
- 8+ years of experience in Information Technology, with proficiency in ETL design/development and data warehouse implementation/development.
